Did you know VMware Elastic Sky X (ESX) was once called ‘Scaleable Server’?

May 12, 2014

VMware has been a vendor of server virtualization software for a long time. This post looks at the history of VMware ESX(i) and uncovers the various names the product has carried over the years.

VMware was founded in 1998. Its first product was VMware Workstation, released in 1999. Workstation was installed on client hardware.

For server workloads, VMware GSX Server was developed. GSX stands for Ground Storm X. Version 1.0 of GSX Server became available around 2001. It had to be installed on top of Windows Server or Linux, making GSX a Type 2 hypervisor just like Workstation. The product was targeted at small organizations. Remember, we are talking 2001!

GSX 2.0 was released in summer 2002. The last version of GSX Server was 3.2.1, released in December 2005. Thereafter GSX Server was renamed to ‘VMware Server’ and made available as freeware. VMware released version 1.0 of VMware Server on July 12, 2006. General support for VMware Server ended in June 2011.

However, VMware realized the potential of server virtualization for enterprises and was working on a Type 1 hypervisor that could be installed on bare metal.

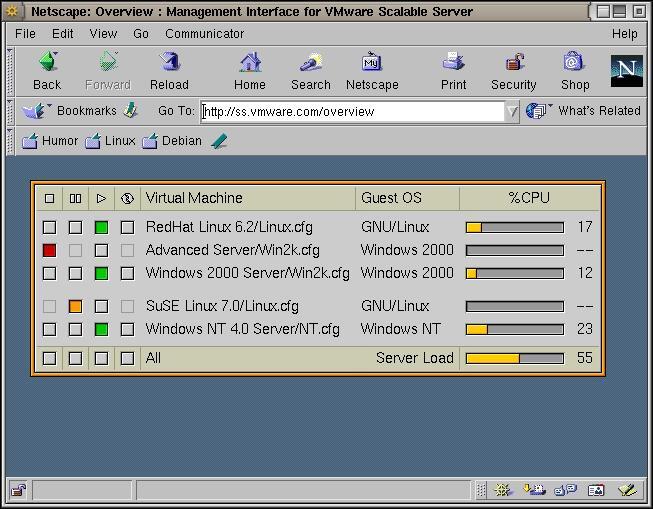

‘VMware Scaleable Server’ was the first name of this product currently known as ESXi. See this screenshot provided by Chris Romano @virtualirishman on his Twitter feed. This must be around 1999 or 2000.

After a while the name was changed to ‘VMware Workgroup Server’. This was around the year 2000. Hardly any reference to these two early names can be found on the internet.

In March 2001 ‘VMware ESX 1.0 Server’ was released. ESX is short for Elastic Sky X. A marketing firm hired by VMware to create the product name believed Elastic Sky would be a good name. VMware engineers did not like that and wanted to add an X to make it sound more technical and cool.

VMware employees later started a band named Elastic Sky with John Arrasjid being the most well known member.

ESX and GSX were both available for a couple of years. ESX was targeted at enterprises. It was not until around 2005/2006 that ESX gained some traction and organizations started to use the product.

ESX had a Service Console: basically a Linux virtual machine that allowed management of the host and its virtual machines. Agents for backup software or other third-party tools could be installed in it.

Development then started on a replacement for ESX that would not have the Service Console. In September 2004 the development team showed the first fully functional version to VMware internal staff. The internal name at the time was VMvisor (VMware Hypervisor). This would become ESXi three years later.

At VMworld 2007 VMware introduced VMware ESXi 3.5. Before that the software was called VMware ESX 3 Server ESXi edition, but this was never made available. This screenshot shows the name ‘VMware ESX Server 3i 3.5.0’. ESX and ESXi share a lot of code.

ESXi has a much smaller footprint than ESX and can be installed on flash memory. This way it can almost be seen as part of the server. The i in ESXi stands for Integrated.

At the release of vSphere 4.0 (May 2009) the ‘ESX Server’ was renamed to just VMware ESX.

Up to vSphere 4.1 VMware offered customers two choices: VMware ESX, which had the Linux console, and ESXi, which had and still has the menu to configure the server. Mind you, you still have access to a limited command line by pressing Alt-F1.

Since vSphere 5, ESX is no longer available.

Similar to the hypervisor, the management software changed names a couple of times as well. VMware VMcenter is so old that Google cannot find any reference to it. It might also have been used as an internal name only. Here is a screendump taken from here

On December 5, 2003 VMware released VirtualCenter. It was used to manage multiple hosts (ESX Server 2.1) and virtual machines.

In May 2009 VMware released ‘vCenter Server 4.0’ as part of vSphere 4.0. From then on, vCenter Server was the new name for VirtualCenter. The last version of VirtualCenter released was 2.5.

Sources used for this blog:

Wikipedia VMware ESX

vladan.fr VMware ESXi was created by a French guy !!!

VM.blog What do ESX and ESXi stand for?

yellow-bricks.com vmware-related-acronyms/

Some more images of old VMware products are here
